What is Docker?

Docker is a container management platform. It is used to build, test and deploy applications quickly. It separates the application from the underlying infrastructure so that software can be developed, tested and deployed faster. It packages an application into units known as containers, which contain everything the application needs to run: libraries, runtime, application code and system tools. With Docker it is easier to design and develop highly scalable applications that follow microservices architecture patterns.

How does Docker work?

As explained above, Docker provides a platform to package and run an application in an isolated environment known as a container. From the application's point of view, the container looks like its own operating system. In a virtual machine, the hypervisor virtualizes the computer hardware and runs a full guest operating system on top of it. A container engine works one level higher: it virtualizes the operating system, so containers share the host's kernel instead of each booting their own. Docker provides the tools and the platform to manage containers.
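One way to see the difference from a virtual machine is to check which kernel a process reports. The sketch below is only an illustration: `kernel_info` is a hypothetical helper, and it assumes a Linux host running Docker natively plus a container image that has Python installed. Run on the host and then inside a container on that host, the two kernel releases should match, because the container does not boot a kernel of its own.

```python
import platform


def kernel_info() -> dict:
    """Return the kernel name and release that this process sees."""
    uname = platform.uname()
    return {"system": uname.system, "release": uname.release}


# Run this once on the host and once inside a container and compare.
info = kernel_info()
print(f"kernel: {info['system']} {info['release']}")
```

On Docker Desktop for macOS or Windows the containers run inside a small Linux virtual machine, so the value to compare against is that VM's kernel rather than the desktop operating system.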

Why use Docker?

Using Docker has many advantages over the traditional way of managing an application's lifecycle:

- faster delivery of applications

- seamless deployment and scaling

- more workloads on same hardware

- standardized operations

- cost saving

With Docker, developers get a standard environment in which to develop the application, and the same container can then be deployed easily to staging or production. This works because containerized applications are isolated from the host OS and the underlying hardware, which makes Docker a good fit for CI/CD workflows.

Docker workloads are highly portable: they can run on basic hardware, on high-end servers, or on a mixture of both. This allows workloads to be managed efficiently, and it makes it easier to scale applications up or down as business needs change.


Docker Engine

Before we get to the Docker Architecture, let us understand Docker Engine and its components.

Docker Engine is an open-source containerization technology for building and containerizing applications. It mainly consists of the following components:

- A server, also known as the Docker daemon (dockerd). This process runs in the background and manages all Docker objects. It listens for Docker API requests sent by clients and processes them.

- An API which Docker clients use to communicate with the Docker daemon.

- A command line interface (docker) which interacts with the Docker daemon through the REST API. It makes the whole process of container management easier.
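To make the client, API and daemon relationship concrete, here is a minimal sketch of a client that talks to the daemon without the docker CLI. It assumes a Linux host where dockerd listens on the Unix socket `/var/run/docker.sock` and where the current user is allowed to open it. `UnixHTTPConnection` and `engine_version` are names made up for this example; `GET /version` is an endpoint of the Docker Engine API.

```python
import http.client
import json
import socket

DEFAULT_SOCKET = "/var/run/docker.sock"  # assumed default daemon socket on Linux


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that runs over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        try:
            sock.settimeout(self.timeout)
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def engine_version(socket_path: str = DEFAULT_SOCKET):
    """Ask the daemon for its version info; return None if it cannot be reached."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        return json.loads(response.read())
    except OSError:
        # Socket missing, permission denied, or the daemon is not running.
        return None
    finally:
        conn.close()


info = engine_version()
print(info.get("Version") if info else "daemon not reachable")
```

The docker CLI does essentially the same thing on a larger scale: every command is turned into one or more API requests, and the daemon does the actual work.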

To learn more about dockerd and docker, please refer to the official Docker documentation.

We will run a few basic commands of both in later posts, where we will start our practical sessions.

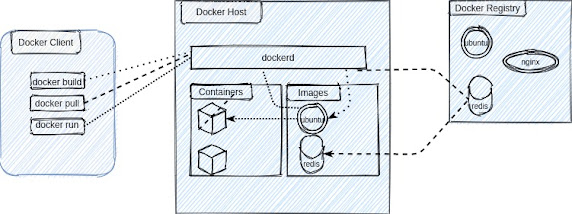

Docker Architecture

The diagram below shows the Docker architecture.

Docker Daemon: As explained in the Docker Engine section, the Docker daemon or ‘dockerd’ listens for Docker API requests and manages Docker objects such as images, containers, networks and volumes. It can also communicate with other Docker daemons to manage Docker services.

Docker Client: The Docker client or ‘docker’ is the primary way users interact with the Docker daemon. When we use a command such as ‘docker build’, ‘docker pull’ or ‘docker run’, the client sends an API request to dockerd. The Docker daemon then executes the request and sends the response back to the Docker client. One Docker client can communicate with multiple Docker daemons.

Docker Registry: A Docker registry is where Docker images are stored. It can be a public registry like Docker Hub or a private registry created to store an organization's own Docker images which are not intended to be made publicly available. When the ‘docker pull’ command is run, the image is downloaded from the registry into the configured image directory on the host where the Docker daemon is running. When ‘docker run’ is executed, Docker first checks whether the required image is available locally. If it is, the container is created from that local image; otherwise the image is downloaded from the registry and the container is then created from it.

Docker Objects: When using Docker, we create images, networks, containers and volumes, which are collectively known as Docker objects.
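The lookup that ‘docker run’ performs can be sketched as a small simulation. This is a toy model, not Docker's implementation: the two dictionaries stand in for the local image store and the registry, and `resolve_image` and the image IDs are made up for illustration.

```python
from typing import Dict, Tuple


def resolve_image(name: str, local: Dict[str, str], registry: Dict[str, str]) -> Tuple[str, str]:
    """Return (image_id, source), where source is 'local' or 'pulled'."""
    if name in local:
        return local[name], "local"
    if name not in registry:
        raise LookupError(f"image not found: {name}")
    # A pull stores a copy on the host, so the next run finds it locally.
    local[name] = registry[name]
    return local[name], "pulled"


registry = {"nginx:latest": "sha256:aaa", "redis:7": "sha256:bbb"}  # made-up IDs
local = {"redis:7": "sha256:bbb"}

print(resolve_image("redis:7", local, registry))       # ('sha256:bbb', 'local')
print(resolve_image("nginx:latest", local, registry))  # ('sha256:aaa', 'pulled')
print(resolve_image("nginx:latest", local, registry))  # ('sha256:aaa', 'local')
```

The third call shows why the second start of a container from the same image is usually much faster than the first: nothing needs to be downloaded.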

Images: Images are read-only templates with instructions for creating a container. Images are built from a set of instructions in a Dockerfile. We will cover the Dockerfile in more detail in upcoming posts. Often one image is based on another image, with some additional customization on top of the base image. Each instruction in a Dockerfile creates a layer within the image. When the Dockerfile is changed and the image is rebuilt, only the layers that have changed, along with the layers built on top of them, are rebuilt; unchanged layers are reused. This is part of what makes Docker images lightweight and fast to build compared to other virtualization technologies.

Containers: A container is a runnable instance of an image. Using the Docker API or CLI we can create, start, stop and delete containers. We can connect a container to one or more Docker networks, attach persistent storage volumes to it, and also create a new image from its current state. By default, containers are isolated from other containers and from their host machine, which helps keep them secure. Docker users are responsible for managing connectivity between containers, for example by creating a separate Docker network and attaching to it the containers that need to communicate with each other.

Network: Docker networking is a big area which will be covered in later posts when we start the practical sessions. At a high level, Docker networking is handled by network drivers, which are as follows:
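Layer reuse during a rebuild can be illustrated with a simplified cache model. In this sketch each layer's cache key is a hash of its instruction chained with the key of the layer below it, so changing one instruction also invalidates every layer above it. This is an assumption-laden simplification: the real builder also looks at the contents of copied files, and `layer_keys`, `build` and the Dockerfile instructions shown are made up for illustration.

```python
import hashlib
from typing import List, Set, Tuple


def layer_keys(instructions: List[str]) -> List[str]:
    """Chain-hash the instructions so each key depends on all earlier ones."""
    keys: List[str] = []
    parent = ""
    for instruction in instructions:
        parent = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()
        keys.append(parent)
    return keys


def build(instructions: List[str], cache: Set[str]) -> List[Tuple[str, str]]:
    """Return (instruction, 'cached' or 'rebuilt') per layer and update the cache."""
    report = []
    for instruction, key in zip(instructions, layer_keys(instructions)):
        status = "cached" if key in cache else "rebuilt"
        cache.add(key)
        report.append((instruction, status))
    return report


cache: Set[str] = set()
v1 = [
    "FROM python:3.12-slim",
    "COPY requirements.txt .",
    "RUN pip install -r requirements.txt",
    "COPY app.py .",
]
v2 = v1[:3] + ["COPY main.py ."]                 # only the last instruction changes
v3 = [v1[0], "COPY deps.txt .", v1[2], v1[3]]   # the second instruction changes

for name, version in (("v1", v1), ("v2", v2), ("v3", v3)):
    print(name, [status for _, status in build(version, cache)])
# v1 ['rebuilt', 'rebuilt', 'rebuilt', 'rebuilt']
# v2 ['cached', 'cached', 'cached', 'rebuilt']
# v3 ['cached', 'rebuilt', 'rebuilt', 'rebuilt']
```

The v3 build shows why instructions that change often are usually placed near the end of a Dockerfile: a change early in the file forces everything after it to be rebuilt.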
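The create, start, stop and delete operations can be pictured as a small state machine. The sketch below is a simplified model, not the daemon's actual logic: the real daemon has more states (for example paused and restarting) and is more lenient about repeated commands. The `Container` class and `TRANSITIONS` table are made up for illustration.

```python
# Allowed transitions: (current state, action) -> next state
TRANSITIONS = {
    ("created", "start"): "running",
    ("running", "stop"): "exited",
    ("exited", "start"): "running",
    ("created", "remove"): "removed",
    ("exited", "remove"): "removed",
}


class Container:
    """Toy container that only tracks its image name and lifecycle state."""

    def __init__(self, image: str):
        self.image = image
        self.state = "created"

    def apply(self, action: str) -> str:
        key = (self.state, action)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {action} a container that is {self.state}")
        self.state = TRANSITIONS[key]
        return self.state


web = Container("nginx:latest")
for action in ("start", "stop", "start", "stop", "remove"):
    print(action, "->", web.apply(action))
# start -> running
# stop -> exited
# start -> running
# stop -> exited
# remove -> removed
```

Note that the model refuses to remove a running container, which mirrors the default behaviour of the CLI: a running container has to be stopped first unless removal is forced.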

- none

- bridge

- host

- overlay

- macvlan

Storage: Volumes are the preferred mechanism for persisting data generated and used by Docker containers. As with networking, this will be explained in detail when we start the practical session on data persistence in a container.

With this we have covered the theory of Docker for beginners. We will start working through practical examples in our next post on Docker.
